Latest Update: 2025/04/11 Chinese Version

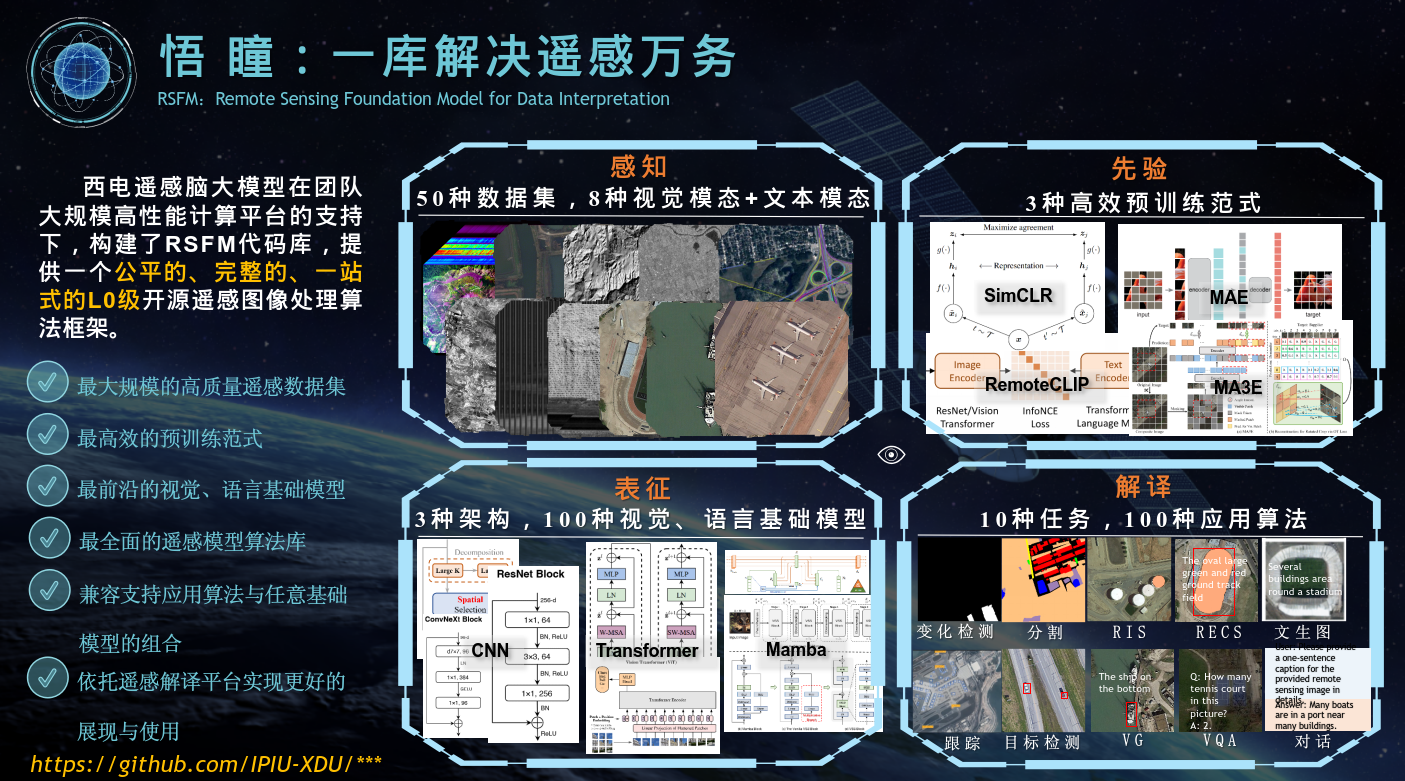

RSFM: Remote Sensing Foundation Model for Data Interpretation

2024.04-Present

To address challenges in remote sensing such as task diversity, label scarcity, and modality gaps, our team developed RSFM (Remote Sensing Foundation Model), a unified code framework and foundation model that integrates visual and language modalities. RSFM handles tasks across multiple domains under a “one model for all tasks” paradigm.

Highlights:

- Model Diversity: Supports CNN, Transformer, and Mamba-based models ranging from 3M to 30B parameters.

- Unified Task Framework: Covers classification, detection, segmentation, tracking, change detection, VQA, generation, and more, with over 100 algorithms and support for multi-modal learning.

- Rich Data Support: Built on standardized splits of 10+ open datasets spanning multispectral, SAR, and textual modalities, covering diverse scenes such as water, vegetation, and urban areas.

My Work Based on RSFM

- Segmentation: Experiments on SegMunich, SPARCS, and 7 datasets using pre-trained SpectralGPT; baseline studies on the Potsdam dataset.

- Change Detection: Experiments on LEVIR-CD+.

- Referring Image Segmentation: Experiments on RefSegRS with ConvNeXt, FocalNet, and InternImage.

- Channel Selection and Repository Optimization for multispectral data.

- Patent Drafting.

- Ongoing Research: Literature review and method benchmarking in referring image segmentation.

Eye-controlled Intelligent Wheelchair

2023.07-2023.11

This wheelchair is designed for patients with limited hand and foot mobility, such as those with ALS.

The wheelchair steers itself based on the patient's eye movements, which makes it easier for patients to get around on their own. It also uses LiDAR to sense the surrounding environment and differential algorithms to estimate its own position, which enables autonomous driving indoors.

Main functions:

- Eye-controlled movement: Tracks the patient's eye movements to control the wheelchair's motion.

- Indoor autonomous driving: Uses LiDAR to drive autonomously indoors.

- Voice and music playback: Patients who are unable to speak can use their eyes to send customized voice messages, such as “I want to eat” and “I want to drink water”. They can also choose songs to play by moving their eyes.

My responsibilities:

- Wrote the User Manual: Developed detailed documentation to guide users on how to operate and utilize the wheelchair’s features.

- Optimized Eye-Control Algorithms: Improved the eye-tracking control algorithms, enhancing system responsiveness and accuracy.

- Microcontroller Integration: Successfully connected and integrated microcontrollers, ensuring stable communication between hardware and software.

2002.03

Yuncheng, Shanxi

CSDN